How to Write a Good Test Summary Report [Guide]

As businesses grow, good test summary reporting becomes essential for analyzing both the bugs found and the testing process itself. But creating a report that is informative, easy to understand, and concise can be challenging, given how many different audiences read it. Test reports are detailed summaries of the testing carried out in a software project. They tell us what the QA team tested, who did the testing, and how and when the tests took place. They can also help you keep track of code changes and bugs.

If the team produces the report well and on time, the report and the feedback it generates stay useful throughout the software build. Below, we’ll spell out best practices for producing a worthwhile test summary report.


Recap | What is a test summary report?

A test summary report is comprehensive documentation of the testing activities conducted throughout the software development life cycle (SDLC).


We include a full list with an example below, but a test summary report may include:

- An overview of test cases by pass/fail status

- Individual summaries of test case failures

- Bug reports

- The environment details of the tests

- The outcomes of the various testing phases, giving a consolidated view of the software's performance, reliability, and compliance with specified requirements.

The test summary report is instrumental to quality assurance teams, stakeholders, project managers, and decision-makers involved in software development. Its primary objective is to document the full process and methodology undertaken in a testing project. But it also serves as a record of the overall quality status of the application you were testing.

Why is a well-written test summary report so important?

The report further provides insights into how the team addressed and resolved defects, helping evaluate the testing process and the quality of specific features or the entire software application. Here’s why we feel that’s important:

1. Communication and transparency

The report provides a clear and concise overview of the testing activities, their outcomes, and any identified issues. This transparency helps the whole team share the same understanding and make decisions based on the same information.

2. Software quality evaluation

The report supports quality evaluation by documenting test results, defect metrics, and other important details, offering valuable insights into how well the software meets quality standards. Testing results can reveal severe flaws that could harm the product and postpone its deployment, and good reporting helps developers triage issues and understand how serious bugs came about.

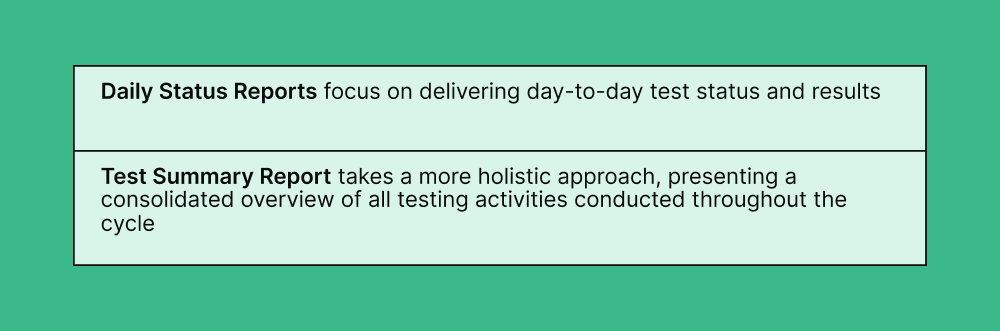

When should you create a test summary report?

The best time for preparing a test summary report is at the end of a testing cycle so it can contain information regarding regression tests. Test reporting provides stakeholders with a comprehensive view of the testing cycle and application health, allowing for corrective action if needed.

What should a test summary report contain?

An informative test summary report should be brief and to the point. The following points are the most common, but remember to tailor them to your specific situation:

Key things you should include in a test summary report:

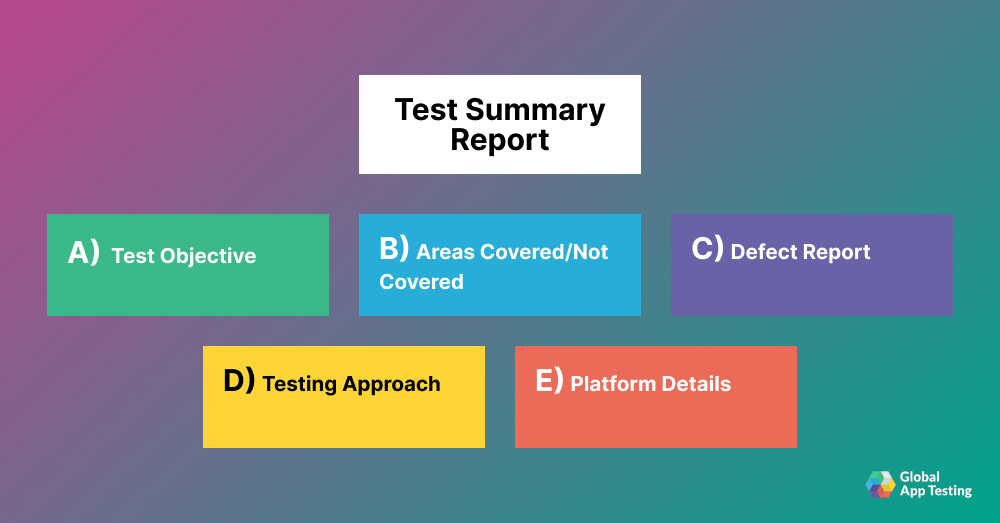

- Test Objective. Clearly state the purpose of the testing, demonstrating clarity in understanding the test object and requirements by the testing team.

- Areas Covered. Include the areas and functionalities of the product subjected to testing. Provide a high-level overview without needing to capture every test scenario in detail.

- Areas Not Covered. It is essential to document product areas skipped in testing. Untested areas can raise concerns at the client's end, so it's crucial to note them and set expectations accordingly. Ensure each point has an associated reason, like limitations in access or device availability.

- Testing Approach. Cover the steps taken and testing approaches adopted, indicating what and how you performed the testing.

- Defect Report. Defects are typically captured in bug reports, but summarizing them in the test summary report gives readers the full picture in one place.

- Platform Details. In the current landscape, products undergo testing across various platforms. Include details of every platform and environment on which you tested the product.

- Overall Summary. Provide feedback on the application's overall status under test. Inform the client about critical issues discovered and their current status, aiding them in estimating the product's readiness for shipping.
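To make the checklist above concrete, here is a minimal sketch of a report skeleton in Python. The `SummaryReport` class, its field names, and the sample values are hypothetical and chosen only to mirror the seven sections listed above; adapt them to your own reporting tool.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class SummaryReport:
    """Hypothetical container with one field per section listed above."""

    test_objective: str
    areas_covered: list[str]
    areas_not_covered: dict[str, str]  # area -> reason it was skipped
    testing_approach: str
    defect_report: list[str]
    platform_details: list[str]
    overall_summary: str

    def render(self) -> str:
        """Return the report as plain text, one heading per section."""
        sections = [
            ("Test Objective", [self.test_objective]),
            ("Areas Covered", [f"- {a}" for a in self.areas_covered]),
            ("Areas Not Covered",
             [f"- {a} ({why})" for a, why in self.areas_not_covered.items()]),
            ("Testing Approach", [self.testing_approach]),
            ("Defect Report", [f"- {d}" for d in self.defect_report]),
            ("Platform Details", [f"- {p}" for p in self.platform_details]),
            ("Overall Summary", [self.overall_summary]),
        ]
        lines = []
        for heading, body in sections:
            lines.append(heading)
            lines.extend(body)
            lines.append("")  # blank line between sections
        return "\n".join(lines).rstrip()


# Illustrative values only; none of them come from a real project.
report = SummaryReport(
    test_objective="Verify the checkout flow before release.",
    areas_covered=["Cart", "Checkout"],
    areas_not_covered={"Payments": "no sandbox access"},
    testing_approach="Scripted functional tests plus exploratory sessions.",
    defect_report=["BUG-1: total not updated after coupon (open)"],
    platform_details=["Android 14, Pixel 8", "iOS 17, iPhone 15"],
    overall_summary="One open major defect; not yet ready to ship.",
)
print(report.render())
```

Storing a reason next to every skipped area makes it hard to list an untested area without explaining why it was skipped.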

How to write a good test summary report in 7 effective steps

Based on conversations with our clients, below are our top seven ways to optimize your test summary reports.

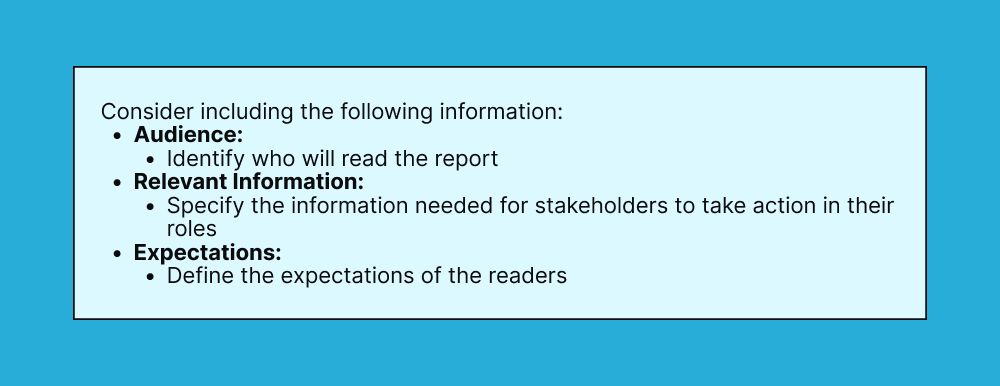

Step 1: Understand the purpose and audience of the test summary report

Before you start creating a report, you need to grasp the purpose and identify the target audience for this report.

Purpose

- Documented end-to-end quality process

- Consolidated overview of test results

- Identified defects

- The overall quality of the software application.

Stakeholders and Target Audience:

- Project Managers – oversee the software development project, using the test summary report for comprehensive insights into testing activities. It aids in project planning, resource allocation, and decision-making for release timelines.

- Developers – use test summary reports to understand and fix defects identified during testing, contributing to codebase refinement and software quality improvement. If you’re a Global App Testing customer, developers would use the bug reports and test case summaries in your Global App Testing dashboard.

- Quality Assurance Teams – Directly involved in testing, QA teams use it as a formal record of testing efforts, offering a holistic view of test results and quality metrics.

- Senior Management – Executives and decision-makers need a high-level overview of software quality and readiness for release.

Step 2: Gather and organize relevant information for the test summary report

The second hallmark of a good test summary is easily accessible and relevant information. The most important items are as follows:

1. Test results

Collect and present key testing metrics, such as pass/fail rates, to provide an overview of the software's functional and non-functional aspects. If you conduct performance testing, include metrics like response times, throughput, and resource utilization to assess the software's efficiency.

2. Test coverage

Outline the extent to which functional requirements have been tested, emphasizing the comprehensiveness of the test suite.

If applicable, include metrics related to code coverage, indicating the proportion of the codebase exercised during testing.

3. Defects found and fixed

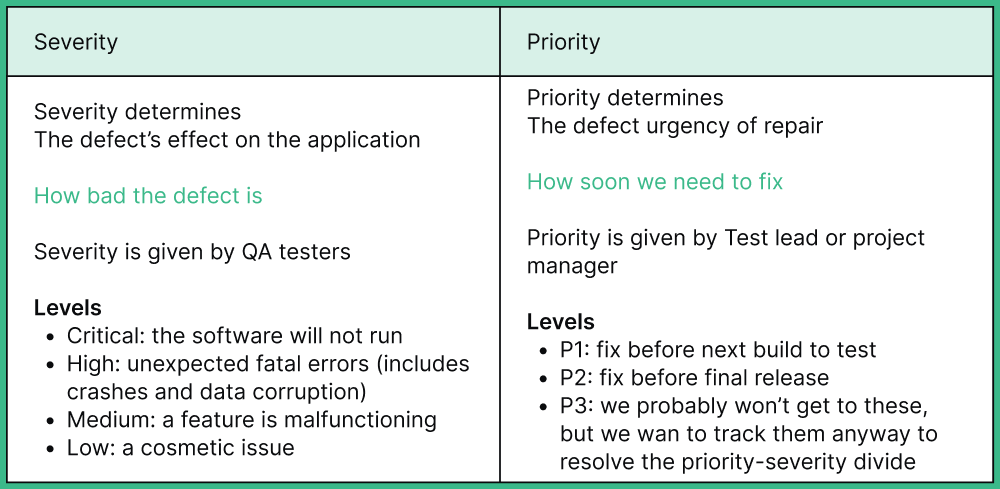

Provide a detailed list of defects discovered during testing, including their severity and priority levels. Highlight the actions taken to address and resolve identified defects, demonstrating the efficacy of the development and testing processes.


4. Test environment details

Specify the details of the test environment, including hardware configurations, software versions, and network setups. (Global App Testing includes these details automatically in the test dashboard.) Address any challenges or issues encountered in the test environment that may have influenced testing outcomes.
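If you collect environment details yourself, a small helper can record them consistently for every run. The sketch below is one possible approach using Python's standard `platform` module. The `describe_environment` function and its `app_version` parameter are hypothetical, and it only captures the machine the script runs on, not remote devices or network setups.

```python
from __future__ import annotations

import platform


def describe_environment(app_version: str) -> dict[str, str]:
    """Collect basic details of the machine running the tests.

    `app_version` is supplied by the caller (an assumption of this sketch).
    """
    return {
        "app_version": app_version,
        "os": platform.system(),           # e.g. "Linux", "Windows", "Darwin"
        "os_release": platform.release(),
        "machine": platform.machine(),     # CPU architecture
        "python": platform.python_version(),
    }


for key, value in describe_environment("1.4.2").items():
    print(f"{key}: {value}")
```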

5. Additional considerations

Include information about whether testing adhered to the planned schedule or if there were any deviations, providing insights into project timeline management. Detail the allocation and utilization of testing resources, showcasing the efficiency of resource management.

Step 3: Provide a comprehensive overview of testing activities conducted

In this section, it is essential to give a thorough account of the testing methodologies you used and the outcomes of the test cases executed. This information gives stakeholders a detailed understanding of the testing process and its effectiveness.

1. Testing methodologies used:

Functional Testing:

- Outline the functional testing methodologies applied, such as unit, integration, and system testing.

- Specify the functional aspects tested and the comprehensiveness of test coverage.

- Summarize the functional testing results, emphasizing key functional metrics and the overall health of the software's features.

Performance Testing:

- Specify the types of performance testing conducted (e.g., load testing, stress testing, scalability testing).

- Present performance metrics, including response times, throughput, and resource utilization.

- Discuss any performance-related issues encountered and their impact on the software.

2. Test cases executed:

Test Case Design:

- Describe the approach taken for test case design: scripted, exploratory, or a combination of both.

- Highlight the traceability of test cases to requirements, ensuring comprehensive coverage of project specifications.

Test Case Execution:

- Provide the total number of test cases executed.

- Provide pass/fail rates for each testing category, offering insights into the stability of different aspects of the software.
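As a rough illustration of the execution figures above, the following sketch computes the total number of executed test cases and a pass rate per testing category. The `pass_rates` function and the `(category, passed)` input shape are assumptions made for this example, not the export format of any particular tool.

```python
from collections import defaultdict


def pass_rates(results):
    """Return {category: pass rate in percent} for (category, passed) pairs."""
    totals = defaultdict(int)
    passes = defaultdict(int)
    for category, passed in results:
        totals[category] += 1
        if passed:
            passes[category] += 1
    return {c: round(100 * passes[c] / totals[c], 1) for c in totals}


# Illustrative results only.
results = [
    ("functional", True),
    ("functional", True),
    ("functional", False),
    ("performance", True),
]
print("Total executed:", len(results))
print(pass_rates(results))
```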

Outcomes:

- Offer a detailed breakdown of identified defects, categorizing them by severity and priority.

- Discuss the actions taken to address and resolve defects, demonstrating the efficiency of the defect management process.

Step 4: Summarize key findings and defects encountered during testing

In this step, briefly summarize the major issues you uncovered during the testing process and assign severity levels to defects found. This section aims to provide stakeholders with a focused overview of critical aspects influencing the software's quality.

1. Major issues discovered:

- Highlight any critical incidents or issues that could significantly impact the software's functionality or performance.

- Summarize significant functional deficiencies affecting the user experience or violating specified requirements.

- Assess and articulate the potential business impact of each major issue, considering factors such as user satisfaction and operational continuity.

- Propose or outline strategies for mitigating the impact of these major issues, demonstrating a proactive approach to problem resolution.

2. Severity levels assigned to defects:

- Define what constitutes a defect with high severity, emphasizing the elements that make it critical to the software's functionality or user experience.

- Outline criteria for assigning medium severity to defects, balancing their impact on the software with other contextual factors.

- Establish the characteristics of defects classified as low severity, highlighting their importance in the broader context of software quality.

- Identify any trends in defect severity, indicating areas of the software that may require specific attention or targeted improvement.
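One simple way to spot severity trends is to count defects per product area and flag the areas where high-severity defects cluster. The sketch below assumes each defect is a dictionary with hypothetical `area` and `severity` keys, and the threshold of two is an arbitrary example value.

```python
from collections import Counter


def hotspots(defects, severity="high", threshold=2):
    """Return areas with at least `threshold` defects of the given severity."""
    counts = Counter(d["area"] for d in defects if d["severity"] == severity)
    return sorted(area for area, n in counts.items() if n >= threshold)


# Illustrative defects only.
defects = [
    {"area": "checkout", "severity": "high"},
    {"area": "checkout", "severity": "high"},
    {"area": "checkout", "severity": "low"},
    {"area": "search", "severity": "high"},
    {"area": "profile", "severity": "medium"},
]
print(hotspots(defects))
```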

Step 5: Include metrics and statistics to support your findings

It is crucial to support conclusions with relevant metrics and statistics. Quantitative measures provide a data-driven view of the testing process, enhancing the report's credibility and completeness.

1. Test coverage metrics:

Percentage of Requirements Covered:

- Clearly outline the methodology used to calculate the percentage of requirements covered by testing.

- Distinguish between coverage of functional and non-functional aspects, if applicable.

- Identify areas where test coverage is notably high or low, offering insights into potential strengths and weaknesses in testing focus.

Traceability Matrix:

- Include a traceability matrix visually representing the linkage between test cases and requirements.

- Evaluate the completeness of traceability, highlighting any gaps that may exist between requirements and executed test cases.
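The coverage percentage and the traceability gaps can both be derived from the same mapping of test cases to requirements. Here is a minimal sketch under that assumption. The `requirement_coverage` function and the `REQ-`/`TC-` identifiers are illustrative, and it treats a requirement as covered if at least one test case references it.

```python
def requirement_coverage(requirements, trace):
    """Return (percent covered, uncovered requirement ids).

    `trace` maps each test case id to the requirement ids it verifies.
    """
    known = set(requirements)
    covered = {r for reqs in trace.values() for r in reqs} & known
    uncovered = sorted(known - covered)
    percent = 100 * len(covered) / len(known) if known else 0.0
    return percent, uncovered


# Illustrative traceability data only.
requirements = ["REQ-1", "REQ-2", "REQ-3", "REQ-4"]
trace = {
    "TC-1": ["REQ-1"],
    "TC-2": ["REQ-1", "REQ-3"],
    "TC-3": ["REQ-4"],
}
percent, uncovered = requirement_coverage(requirements, trace)
print(f"{percent:.1f}% of requirements covered; gaps: {uncovered}")
```

Stating the rule for "covered" explicitly, as the docstring does here, is the methodology the report should spell out alongside the number.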

2. Defect density metrics:

- Define the formula used to calculate defect density (number of defects per unit of size, such as lines of code or function points).

- Consider benchmarking defect density against industry standards or previous projects to provide context for assessment.

- Illustrate the trend of defect density throughout the testing process, indicating periods of heightened or reduced defect discovery.

- Compare defect density based on severity levels, allowing stakeholders to focus on areas with higher impact.
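As a worked example of the formula, the sketch below computes defect density as defects per thousand lines of code (KLOC), overall and per severity level. KLOC is only one possible size unit, and the `defect_density` function and the sample figures are assumptions for illustration.

```python
def defect_density(defect_count, lines_of_code):
    """Return defects per thousand lines of code (KLOC)."""
    if lines_of_code <= 0:
        raise ValueError("lines_of_code must be positive")
    return defect_count / (lines_of_code / 1000)


# Illustrative figures only: 30 defects found in a 12,000-line codebase.
lines_of_code = 12_000
by_severity = {"high": 6, "medium": 0, "low": 24}

overall = defect_density(sum(by_severity.values()), lines_of_code)
per_severity = {s: defect_density(n, lines_of_code) for s, n in by_severity.items()}
print(f"Overall: {overall} defects/KLOC")
print(per_severity)
```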

Step 6: Conclude with recommendations for future testing improvements

In concluding the test summary report, look beyond the current state of testing and propose strategies for future improvement:

1. Process Enhancements:

- Conduct a root cause analysis to unveil underlying process gaps.

- Share lessons learned to apply insights from the testing process to future projects.

- Align testing practices with industry standards and adopt new tools or methodologies for enhanced efficiency.

2. Prioritize Continuous Training and Skill Development:

- Assess and address skill gaps within the testing team.

- Encourage cross-training to diversify skill sets and improve overall team capabilities.

3. Additional Tests for Future Cycles:

- Introduce exploratory testing as a complementary approach to scripted testing for uncovering unforeseen issues.

- Enhance security testing efforts by broadening the scope to cover a wider range of potential vulnerabilities.

- Incorporate usability testing to assess the software's user-friendliness and overall user experience.

- Evaluate automation opportunities to improve efficiency and coverage. For example, consider automating regression testing to expedite the validation of existing functionality.

| Key roles | Perception | Probable expectations from report |
| --- | --- | --- |
| Business Analysts | Application can withstand a typical peak hour on any day | They would like to see this capacity hold while concurrency and frequency vary across a typical day for all the items listed for sale |
| Developer | Application does not run into race conditions and is thread safe | They would like to see whether concurrency and frequency cost performance or scalability |
| Operations team | Application resource utilization does not cause issues during peak hours | They would like to see that it is stable and scales proportionally with the generated transactions. It is also important to understand the probable first point of failure |
| Enterprise Architect | Application architecture and its scalability aspects are fine | They would like to see whether the application is scalable for the future, and the best distribution of resources such as application instances and capacity |
| Users | Application is fit for use and will have good end-user experience | They would like seamless and uninterrupted availability with reasonable response times |
| Sponsor | The developed application delivers the expected ROI | They would like to see that all defined and implicit requirements (such as optimal capacity utilization) are met, along with analytics and projections for the term |

Step 6: Exit criteria assessment

You need to evaluate the exit criteria to determine if testing is complete.

It involves:

- Ensuring all planned test cases have been executed successfully.

- Confirming that all critical issues identified during testing have been resolved.

- Putting action plans in place for any remaining open issues, with a clear target for resolution in the next release cycle.
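The three checks above can be expressed as a small function that lists whichever criteria are still unmet. This is a sketch only. The `CycleStatus` fields and the wording of the messages are hypothetical, and a real project would take its exit criteria from its own test plan.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class CycleStatus:
    """Hypothetical end-of-cycle figures used to assess exit criteria."""

    planned_cases: int
    passed_cases: int
    open_critical_issues: int
    open_issues_without_plan: int


def unmet_exit_criteria(status: CycleStatus) -> list[str]:
    """Return a description of each exit criterion that is not yet met."""
    unmet = []
    if status.passed_cases < status.planned_cases:
        unmet.append("not all planned test cases executed successfully")
    if status.open_critical_issues > 0:
        unmet.append("critical issues still open")
    if status.open_issues_without_plan > 0:
        unmet.append("open issues without an action plan")
    return unmet


# Illustrative figures only.
status = CycleStatus(
    planned_cases=120,
    passed_cases=118,
    open_critical_issues=0,
    open_issues_without_plan=1,
)
print(unmet_exit_criteria(status) or "All exit criteria met")
```

An empty list means every criterion is met; anything else is the list of concerns to carry into the report.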

Step 7: Approval for Go-Live

At this stage, the testing team assesses whether the application is ready to go live.

The team gives the green light only if the application meets all exit criteria. If any exit criteria are not fully met, the report identifies the areas of concern that require attention.

The final release decision then rests with senior management and other stakeholders, who use the report to make a thorough and informed judgment about the application's readiness.

How can Global App Testing help you?

Whether you need comprehensive testing or to overcome a minor hiccup, GAT can help you.

GAT offers complete QA solutions combining crowdtesting and automation to help you release high-quality software wherever you are. This approach involves continuous testing throughout the software development life cycle (SDLC), with a focus on Continuous Integration (CI) and Continuous Delivery (CD). This method boosts release confidence and reduces risks with each release.

Our key features include

- Intelligent QA automation powered by a global crowd of 50,000+ vetted testers spanning 189+ countries.

- Testing of your applications with real users on real devices worldwide, ensuring that your software meets the needs and expectations of diverse user demographics.

- Actionable results within 24–36 hours, allowing for quick identification and resolution of potential issues.

- Customized tests that run in as little as 30 minutes, providing rapid feedback on your applications' functionality and performance.

- Detailed bug reports that make issues easy to share, recreate, and resolve, backed by our "always-on" tester network, available 24/7/365.

- Scaling your QA efforts on demand, deploying testing resources precisely when and where you need them. Analyze or export bugs directly into your development workflow for seamless integration.

Whether you aim to increase release velocity, achieve global QA coverage, or maximize team productivity, our solutions can help you overcome QA bottlenecks and achieve your development goals. So, sign up and arrange a discovery call today!

