The Ultimate Guide to Smoke Testing

Did you know that smoke testing has the highest ROI of any testing you can use to identify bugs? Smoke testing offers a quick and cost-effective way of ensuring that the software's core functions work properly before the build is subjected to further testing and released for public download.


In this ultimate guide to smoke testing, you will learn:

- What smoke testing is

- When to test

- The difference between sanity, regression, and smoke testing

- Mistakes we've seen clients make in their smoke testing process and best practices to follow.

Let's start!

What is smoke testing?

"Smoke testing" refers to broad but shallow functional testing of a product's main functionality. It is a software testing method designed to check that an application's fundamental features work. Its primary objective is to identify major defects early so they can be fixed, ensuring the application is stable enough for subsequent testing phases.

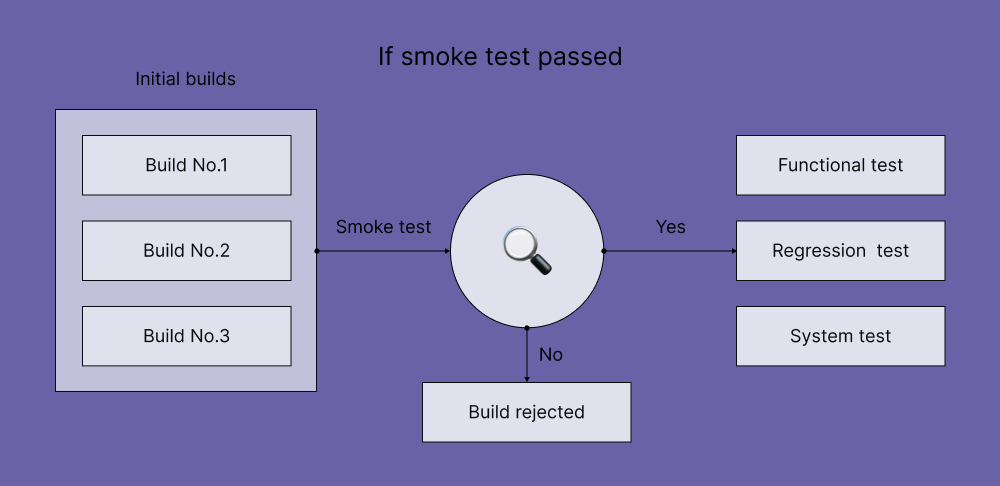

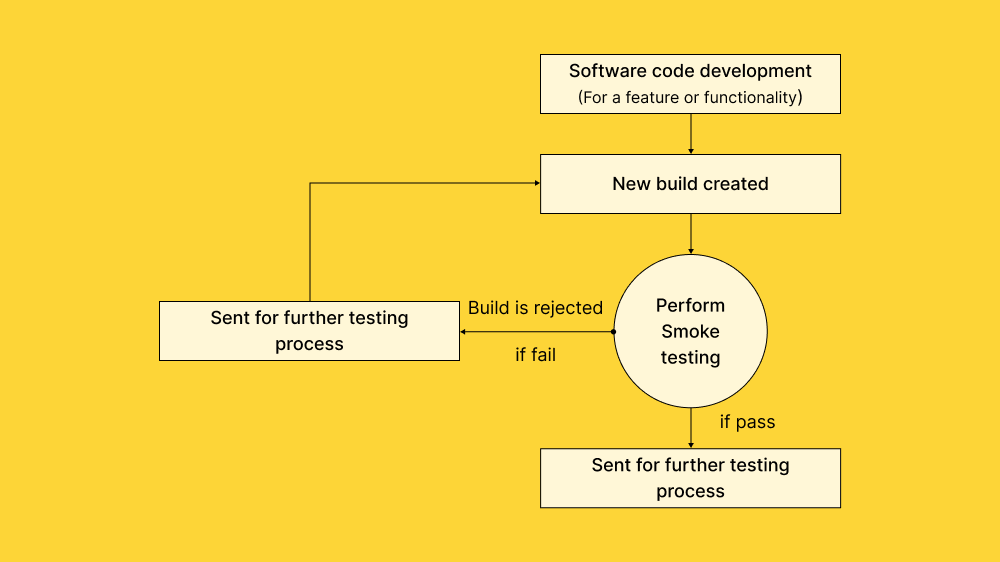

During smoke testing, the software build is deployed to a quality assurance environment, where the testing team verifies the product/application's stability. Successful smoke tests validate that the software can advance to more in-depth testing and eventually be released to the public.

Note: If the smoke test fails, developers can use application logs or screenshots testers provide to pinpoint and address the identified issues.

The goal of smoke testing

The goal of a smoke test is to prevent the needless expense and time waste of deeper tests on a broken product. It is called a smoke test because if there is smoke, there is a fire. The test is meant to catch any "fires" before they become major issues.

For example, in the context of the Titanic, a smoke test would ask if the Titanic is floating. If the test shows that the Titanic is floating, further tests would need to be conducted to verify that it is seaworthy. If the Titanic is not floating, there is no point in conducting additional tests yet because it would be clear that there is a significant problem with the ship's basic functionality.

Where should you use smoke testing?

A smoke test can be used in multiple build environments but is strictly functional. It should try to touch every component of your product ("broad") but must be fast to achieve its goals ("shallow"). For example, you might test that you can set up a bank account and transfer money (for a banking app), buy an item (e-commerce), or make a social post (social software).
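To make "broad but shallow" concrete, here is a minimal sketch for the banking example. `FakeBank` is a hypothetical in-memory stand-in we invented for illustration, not a real API. Each check exercises one happy path once and nothing more.

```python
from dataclasses import dataclass, field


@dataclass
class FakeBank:
    """Hypothetical in-memory stand-in for a real banking backend."""
    balances: dict = field(default_factory=dict)

    def open_account(self, owner: str, deposit: int) -> None:
        self.balances[owner] = deposit

    def transfer(self, src: str, dst: str, amount: int) -> None:
        if self.balances[src] < amount:
            raise ValueError("insufficient funds")
        self.balances[src] -= amount
        self.balances[dst] += amount


def smoke_open_account(bank: FakeBank) -> bool:
    # Shallow: one account, one deposit, no edge cases.
    bank.open_account("alice", 100)
    return bank.balances.get("alice") == 100


def smoke_transfer(bank: FakeBank) -> bool:
    # Shallow: a single valid transfer between two accounts.
    bank.open_account("alice", 100)
    bank.open_account("bob", 0)
    bank.transfer("alice", "bob", 40)
    return bank.balances == {"alice": 60, "bob": 40}


def run_smoke_suite(checks) -> dict:
    """Run each check against a fresh app instance; an exception counts as a failure."""
    results = {}
    for check in checks:
        try:
            results[check.__name__] = bool(check(FakeBank()))
        except Exception:
            results[check.__name__] = False
    return results


# Broad: one check per core feature.
results = run_smoke_suite([smoke_open_account, smoke_transfer])
print(results)
```

Overdrafts, currency rounding, and locked accounts are left out on purpose. Those belong in the deeper tests that run once the smoke suite passes.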

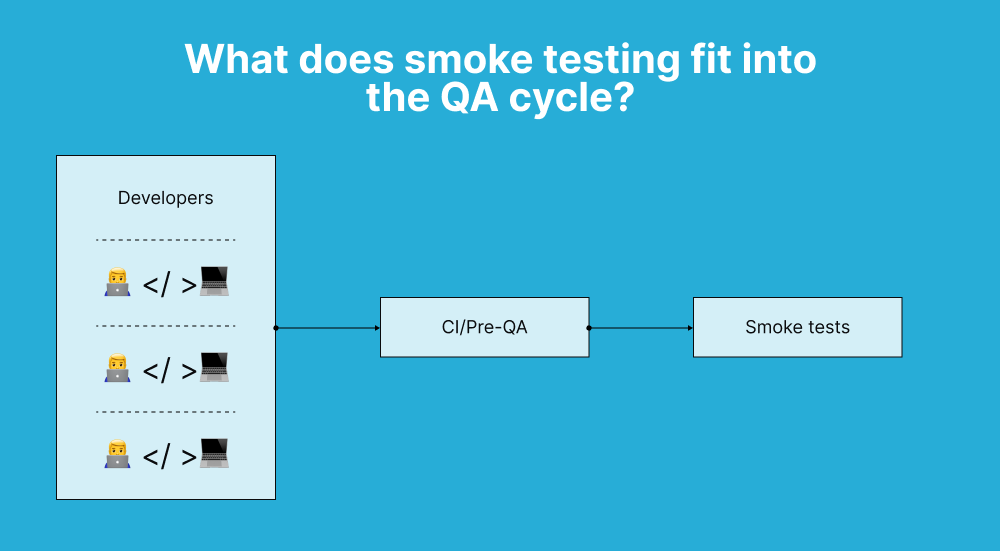

When and who performs smoke testing?

When new features are added to existing software, smoke testing is usually conducted to ensure the system still works as intended. In the development environment, developers perform smoke testing to verify that the application works correctly before releasing the build to the Quality Assurance (QA) team.

Once the build is in the QA environment, QA engineers carry out smoke testing. Each time a new build is introduced, the QA team identifies the primary functionalities in the application for the smoke testing process.

In short, smoke testing can be performed by developers, testers, or a combination of both.

The name origin

The term "smoke testing" has an intriguing origin, with two prominent theories explaining its nomenclature. According to Wikipedia, the term likely originated from the plumbing industry, where smoke was used to test for leaks and cracks in pipe systems. Over time, this term found application in the testing of electronics.

Another theory suggests that "smoke testing" emerged from hardware testing practices, where devices were initially switched on and tested for the presence of smoke emanating from their components. While these theories provide historical context, the contemporary significance of smoke testing lies in its widespread use in the software development process. Although no smoke is involved, the same underlying principles apply to software testing.


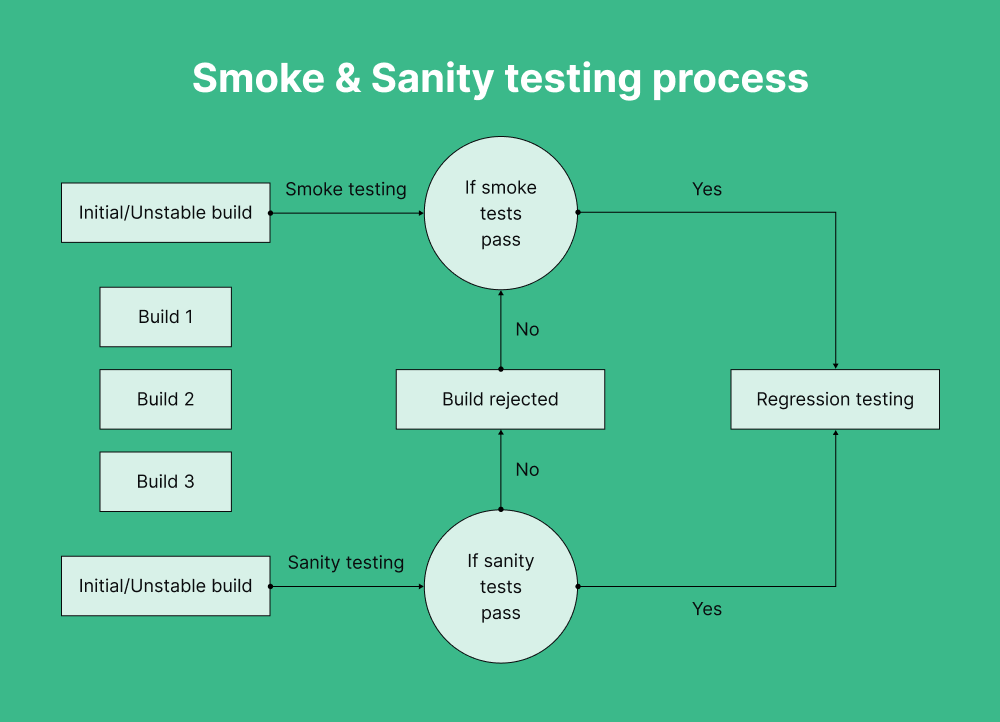

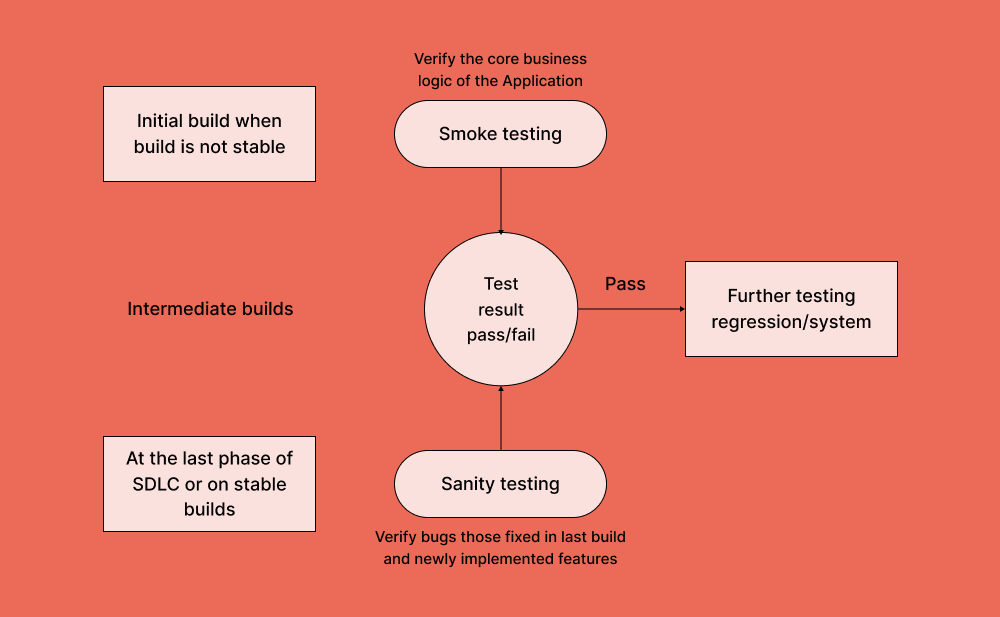

Smoke testing vs. Sanity testing

In industry practice, both sanity and smoke tests may be necessary for software builds, with the smoke test typically performed first, followed by the sanity test. However, because their test cases are often combined, the two terms are occasionally used interchangeably, which leads to confusion. Understanding the nuances between these testing methods is vital for effective software development and quality assurance.

What is Sanity testing?

Sanity testing is performed to evaluate if the additional modules in an existing software build are functioning as expected and can proceed to the next level of testing.

It is a subset of regression testing, focusing on whether recent changes to the software have introduced regressions.

The primary aim is to verify that changes or proposed functionalities align with the plan. Typically performed after successful smoke testing, the focus during sanity testing is on validating functionality rather than conducting detailed testing. It involves selecting test cases that cover the important aspects of the changed areas, resulting in narrow, focused testing.

For example, in an e-commerce project, sanity testing would validate specific modules, such as the login and user profile pages, to ensure changes do not impact related functionalities.

Differences between Sanity testing and Smoke testing

- Goal: Smoke testing verifies that the build is stable, while sanity testing verifies that recent changes behave rationally.

- Performers: Smoke testing is performed by software developers or testers, whereas sanity testing is performed by testers alone.

- Purpose: Smoke testing verifies critical functionalities, while sanity testing checks new functionalities such as bug fixes.

- Subset category: Smoke testing is a subset of acceptance testing, and sanity testing is a subset of regression testing.

- Documentation: Smoke testing is usually documented or scripted, while sanity testing usually is not.

- Scope: Smoke testing verifies the entire system, while sanity testing verifies a specific component.

- Build stability: Smoke testing can be done on stable or unstable builds; sanity testing is conducted on relatively stable builds.

Smoke testing vs. Regression testing

Regression testing might be confused with smoke testing because it also occurs after each new build. However, the fundamental distinction lies in its purpose. Unlike smoke testing, regression testing delves deeper, extending beyond a quick check that the main user journeys work.

Its primary objective is to ensure that recent changes, such as bug fixes, addition or removal of features/modules, code or configuration alterations, or changes in requirements, do not adversely affect the existing functionality or features of the application.

Differences between Regression testing and Smoke testing

- Goals: Smoke testing ensures the software's primary functions are working correctly. Regression testing ensures that changes or updates have not created unexpected effects in other software parts.

- Scope: Smoke testing is limited in scope, covering only the most basic functions of the software. Regression testing covers a much broader scope, including all areas of the software, even features that may not have been changed.

- Time required: Smoke testing can be completed relatively quickly. Regression testing can take longer as it covers more areas of the software.

- Frequency: Smoke testing is usually done at the start of a software development cycle and sometimes during integration testing. Regression testing is generally done after software elements have been changed or updated.

- Test cases: Smoke testing often uses a limited set of test cases. Regression testing typically uses a large set of test cases.
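One way to picture the difference in test-case volume is to treat the smoke suite as a small tagged subset of the regression suite. The sketch below uses plain Python and a made-up catalogue. The test names and tags are assumptions for illustration only. Test runners such as pytest offer a comparable idea through markers.

```python
# Hypothetical test catalogue: every name and tag here is invented for illustration.
TEST_CATALOGUE = {
    "test_login_happy_path": {"smoke", "regression"},
    "test_checkout_happy_path": {"smoke", "regression"},
    "test_login_locked_account": {"regression"},
    "test_checkout_expired_card": {"regression"},
    "test_checkout_currency_rounding": {"regression"},
}


def select_tests(catalogue, tag):
    """Return the sorted names of all tests carrying the given tag."""
    return sorted(name for name, tags in catalogue.items() if tag in tags)


smoke_suite = select_tests(TEST_CATALOGUE, "smoke")
regression_suite = select_tests(TEST_CATALOGUE, "regression")

print(f"smoke: {len(smoke_suite)} tests, regression: {len(regression_suite)} tests")
```

In this sketch the smoke suite contains only the happy paths, while the regression suite also carries the edge cases.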

Smoke testing as part of your test anatomy

Like all testing, your smoke testing process is highly specific to your organization. It can be traced back to your organization's commercial and operational incentives. Global App Testing generally advocates for higher quality processes and products in our book Leading Quality. (But then, we would say that, wouldn't we?)

In a narrow sense, the moment to run a smoke test is "whenever you want to check the application is working." In a more prescriptive sense, there are key moments at which smoke tests are a sensible investment. Let's take a look at common moments to run smoke tests:

1. Before you commit code to a repository: if you don't run your full test suite in a local environment, you should at least make sure you haven't broken anything so severe that it shows up in a smoke test. In the initial pre-commit stage, devs can work on local devices by running a Git test script coupled with a client-side hook.

2. Before a large test series, including regression and acceptance testing. Although an automated smoke test would theoretically save time against any manual test, how much time you'll save is proportional to the scale of the test series you're about to undertake. We undertake manual smoke tests on clients' behalf before a major test series, too, but the saving is more marginal.

3. Immediately after deployment is a sensible time to undertake a smoke test to ensure that everything is still working properly.

4. Any time: as long as your smoke test is rapid (and probably automated), we would generally advocate for a liberal smoke testing policy. Any time you need to test whether your system is working in a big-picture sense, it demands a smoke test.
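For the pre-commit moment, a client-side hook can be sketched as follows. Git runs an executable file at `.git/hooks/pre-commit` before each commit and aborts the commit if that file exits with a non-zero status. The command list is a placeholder assumption, so swap in whatever starts your own quick checks.

```python
import subprocess
import sys

# Hypothetical smoke commands: replace with the quick checks for your own project.
SMOKE_COMMANDS = [
    [sys.executable, "-c", "print('app starts')"],
]


def run_smoke_commands(commands, timeout_seconds=60):
    """Return 0 if every command exits cleanly, otherwise 1."""
    for command in commands:
        try:
            completed = subprocess.run(command, timeout=timeout_seconds)
        except subprocess.TimeoutExpired:
            # A smoke test that hangs is treated as a failure so the hook stays fast.
            print(f"smoke check timed out: {command}", file=sys.stderr)
            return 1
        if completed.returncode != 0:
            print(f"smoke check failed: {command}", file=sys.stderr)
            return 1
    return 0


exit_code = run_smoke_commands(SMOKE_COMMANDS)
print("smoke exit code:", exit_code)
```

To use this as a real hook, you would save it as `.git/hooks/pre-commit`, make it executable, add a Python shebang line, and end the file with `raise SystemExit(exit_code)` so that a failing check blocks the commit.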


Smoke testing within CI/CD, DevOps, and Agile

Because smoke tests generate faster failures and shorter feedback loops, they have become closely associated with modern development methodologies such as Agile, and with frameworks that focus intensely on the speed of deployment. With DevOps in particular, while it's not a technical requirement to have automated smoke tests, it is probably a de facto one.
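The "faster failure" idea can be sketched as a pipeline that runs its cheapest stage first and stops at the first failure. The stage functions below are placeholders we made up. In a real pipeline each one would trigger the corresponding job in your CI system.

```python
def run_pipeline(stages):
    """Run (name, callable) stages in order and stop at the first failing stage."""
    executed = []
    for name, stage in stages:
        executed.append(name)
        if not stage():
            return {"passed": False, "failed_stage": name, "executed": executed}
    return {"passed": True, "failed_stage": None, "executed": executed}


# Placeholder stages: the smoke stage simulates a broken build.
def smoke_stage() -> bool:
    return False


def regression_stage() -> bool:
    return True


def deploy_stage() -> bool:
    return True


report = run_pipeline([
    ("smoke", smoke_stage),
    ("regression", regression_stage),
    ("deploy", deploy_stage),
])
print(report)
```

Because the smoke stage fails, the slower regression stage and the deployment never start, which is where the shorter feedback loop comes from.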

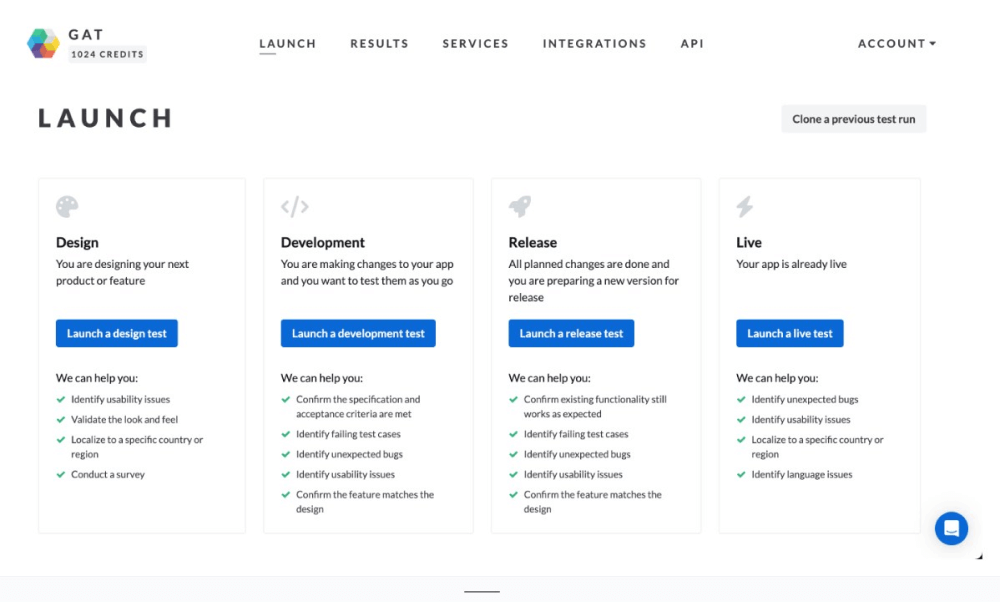

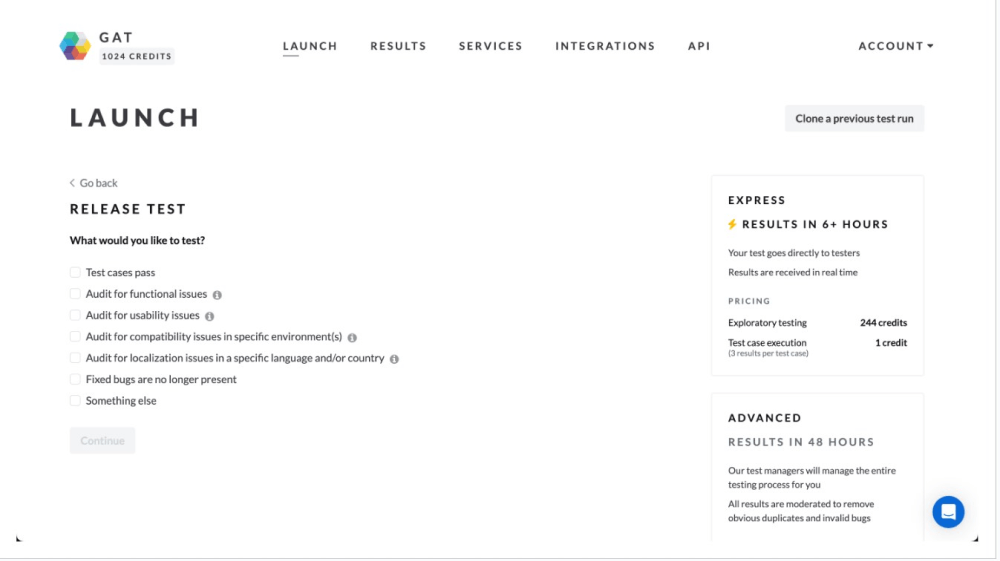

How do I execute a smoke test using Global App Testing?

You can execute any manual test, including smoke tests, by logging into your Global App Testing dashboard, entering or uploading the appropriate test cases, and pressing "launch" to receive your results in as little as 2 hours.

Express tests with Global App Testing are made to offer manual tests with the ease, convenience, and speed of running automated tests. We have also integrated with Jira, TestRail, GitHub, Slack, and Zephyr Squad so you can launch and receive test results where your teams like to work.

For more complex and bespoke testing for our clients, we will often run a quick smoke test as a matter of policy to verify that the test is worth doing.

How to write a suitable test case?

To create effective test cases, follow these steps:

1. Identify test areas: Enumerate all the product areas suitable for a smoke test.

2. Define core functionality: Break down the core functionality of each identified area into step-by-step processes.

3. Write test cases: Document the identified steps as test cases.

4. Execute tests properly: Implement the tests in a structured manner, writing "pass/fail" next to each step.

5. Avoid Ad Hoc play testing: Refrain from ad hoc play testing and opt for a systematic approach to ensure the test's accuracy.

By executing these steps, you enhance the likelihood of your tests working as intended, preventing the temptation to cut corners in the testing process.
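As a rough illustration of these steps, a smoke test case can be modelled as an area, an ordered list of steps, and a pass/fail mark per step. The class names and the checkout steps below are assumptions for the sake of the example, not a prescribed format.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Step:
    description: str
    passed: Optional[bool] = None  # None means the step has not been executed yet


@dataclass
class SmokeTestCase:
    area: str
    steps: List[Step] = field(default_factory=list)

    def record(self, index: int, passed: bool) -> None:
        """Write "pass" or "fail" next to a single step."""
        self.steps[index].passed = passed

    def verdict(self) -> str:
        if any(step.passed is None for step in self.steps):
            return "incomplete"
        return "pass" if all(step.passed for step in self.steps) else "fail"


# Hypothetical e-commerce test case, broken down step by step.
checkout = SmokeTestCase(
    area="checkout",
    steps=[
        Step("Add an item to the basket"),
        Step("Pay with a saved card"),
        Step("See the order confirmation"),
    ],
)

checkout.record(0, True)
checkout.record(1, True)
verdict_before = checkout.verdict()  # the last step has not been run yet

checkout.record(2, False)
verdict_after = checkout.verdict()
print(verdict_before, verdict_after)
```

Keeping an explicit "incomplete" state makes it harder to report a pass when a step was skipped, which is the corner-cutting the steps above are meant to prevent.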

Pro tip

Global App Testing can turn tests around in as little as 6 hours. The service is designed to take edge cases and the tests waiting in your automation queue out of the hands of your quality engineers, giving them the bandwidth to automate more tests.

Smoke testing best practices and failure categories

Here's our advice on what to do when you're smoke testing:

1. Smoke tests offer the highest ROI of any test you can automate, so you should probably automate them

Because of the frequency with which you'll undertake smoke testing, the savings of automating your smoke tests are the greatest of all the savings we'd associate with the automation of any test. We're a manual testing solution, so we often have to make judgments about when our clients would be better off with an automated test (or even give our clients the bandwidth to automate more of their testing suite) – but a smoke test is usually better off done by a program.

2. Run smoke tests frequently

Once you have automated your smoke test, especially if some parts of your testing suite require manual or time-consuming work, you can benefit from testing more frequently.

We suggest running the smoke test at every stage of the delivery process, from before you commit until after deployment. If your smoke test is manual, consider automating it to ensure more frequent and efficient testing.

3. Ensure the whole system is touched

One failure mode of building a smoke test is that only part of the system gets tested by the test. If your smoke test is not sufficiently broad, it will fail to find a major fault in some modules. Ensure that your test cases touch every function of the system without looking into the complex instances of each function. If you have a modular system, you should ensure that the relationship between modules is tested in addition to the modules themselves.
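A simple way to spot this failure mode is to list your modules and the relationships between them, then check which ones no smoke test touches. Everything in the sketch below (module names, links, and the coverage map) is a made-up example, assuming you maintain such a map by hand.

```python
# Hypothetical system description.
MODULES = {"accounts", "payments", "notifications"}
INTEGRATIONS = {("accounts", "payments"), ("payments", "notifications")}

# Hypothetical map of what each smoke test touches.
SMOKE_COVERAGE = {
    "smoke_open_account": {
        "modules": {"accounts"},
        "integrations": set(),
    },
    "smoke_transfer": {
        "modules": {"accounts", "payments"},
        "integrations": {("accounts", "payments")},
    },
}


def coverage_gaps(modules, integrations, coverage):
    """Return the modules and module-to-module links that no smoke test touches."""
    touched_modules = set().union(*(entry["modules"] for entry in coverage.values()))
    touched_links = set().union(*(entry["integrations"] for entry in coverage.values()))
    return {
        "modules": sorted(modules - touched_modules),
        "integrations": sorted(integrations - touched_links),
    }


gaps = coverage_gaps(MODULES, INTEGRATIONS, SMOKE_COVERAGE)
print(gaps)
```

Here the notifications module and its link to payments are never exercised, so a major fault there would slip past this smoke suite.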

Smoke testing failure categories

1. Lack of focus

It is common for smoke tests to be executed poorly when the test's primary purpose is forgotten. The objective of the test is to save time, not to discover every bug. Hence, excessive depth is the most common failure category of smoke tests: when a thorough test is conflated with a quick check, the test suite is likelier to fail at both. Conversely, if not every software module is tested, the result may be insufficient testing of the product and tests that are not cost-effective in terms of time or money.

2. Failure to identify whether it will save time

Failure to (correctly) identify when and whether a smoke test will save your organization time is the second most common category of failure. Probably, you are smoke testing less frequently than optimal, and you could save your organization more time by automating smoke tests and testing more often. But in some cases, you may be testing pointlessly, where a smoke test will not save your organization any time.
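A back-of-the-envelope model can help with that judgment. The sketch below rests on simplifying assumptions of our own: a failed smoke test lets you skip the whole deeper run, the smoke test catches every broken build, and all the numbers are invented for illustration.

```python
def expected_minutes_saved(p_broken: float, deep_minutes: float, smoke_minutes: float) -> float:
    """Expected saving per build: deep-test time avoided on broken builds, minus the smoke test's own cost."""
    return p_broken * deep_minutes - smoke_minutes


def break_even_probability(deep_minutes: float, smoke_minutes: float) -> float:
    """Share of broken builds above which the smoke test pays for itself."""
    return smoke_minutes / deep_minutes


# Hypothetical figures: a 5-minute smoke test in front of two different test series.
big_series = expected_minutes_saved(p_broken=0.2, deep_minutes=120, smoke_minutes=5)
small_series = expected_minutes_saved(p_broken=0.02, deep_minutes=30, smoke_minutes=5)

print(f"large series: {big_series:+.1f} min, small series: {small_series:+.1f} min")
print(f"break-even for the large series: {break_even_probability(120, 5):.1%}")
```

Under these assumptions the smoke test pays off in front of the large, failure-prone series and costs time in front of the small, reliable one, which matches the point that the saving scales with the test series that follows.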


FAQ

What happens if Smoke testing fails?

If smoke testing fails, it indicates significant issues in the basic functionalities. Developers may need to investigate and fix the problems before further testing. Failed smoke tests may prevent the software build from progressing in the development cycle.

Is Smoke testing automated or manual?

Smoke testing can be both automated and manual. Automated smoke tests are scripted and executed automatically, while manual smoke tests involve testers manually checking essential functionalities.

Can Smoke testing replace comprehensive testing?

No, smoke testing is not a replacement for comprehensive testing. It is a quick check to ensure the basic functionalities are working. More thorough testing, such as regression testing and integration testing, is still necessary for comprehensive software quality assurance.
